AI and the Battle for Communication

by Ryan McGuigan

The Reshaping of Human Communication

A crisis is more than a challenge – it is a moment of reckoning, a pivotal point at which action must be taken before irreversible damage occurs. We are currently facing such a crisis with Artificial Intelligence (AI), specifically in its role in reshaping human communication. This is not a crisis of technological capability, since AI is already a highly advanced tool; it is a crisis of control and intent. AI is no longer merely assisting in communication; it is fundamentally altering how we receive, process, and engage with information.

This crisis is perhaps most clearly observed in the AI-driven systems that now govern our daily interactions with media, news, and information. Algorithms control what we see on social media platforms, search engines determine what we find online, and AI-generated content increasingly populates our news feeds. These AI systems are powerful, and they are not neutral. They are guided by the interests of the few corporations and entities that control them, not by the desire to promote truth, fairness, or public good.

At the Munich Security Conference, US Vice President J.D. Vance highlighted these risks, warning that AI companies now wield unprecedented power to shape political discourse. He cautioned that without appropriate oversight, AI-driven communication platforms could become powerful tools of political manipulation, subtly steering public opinion and suppressing dissent through algorithmic control. His concerns underscore the fundamental issue at hand: AI has become an arbiter of truth, yet it remains largely unregulated and unaccountable.

Vance also compared AI’s impact to that of the steam engine – a transformative force that defined an era. I share this view. Just as the Industrial Revolution reshaped economies and societies, AI has the potential to revolutionise human communication and productivity. However, history has taught us that innovation, while vital, must be accompanied by responsible regulation. The Industrial Revolution brought immense progress, but without safeguards, it also led to exploitative labour practices and social upheaval. AI must be approached in the same way: we must embrace its potential while ensuring it serves society rather than disrupts it. Regulation is not about stifling progress but about directing it towards the common good.

Self-Regulation and the Arbiter of Truth

The most immediate danger we face is the tendency of AI-driven platforms to self-regulate. The companies controlling these AI systems, such as social media giants and search engines, claim to have the best interests of society at heart. Yet their actions tell a different story. These corporations are primarily driven by profit, not truth. Their algorithms are designed to maximise engagement, not to provide accurate, balanced, or unbiased information. In practice, this means prioritising sensational headlines, controversial opinions, and emotional responses, often at the expense of truth.

Take, for example, the rise of AI-generated content. Deepfakes – video and audio that can convincingly impersonate real individuals – have already been used to spread misinformation and manipulate political discourse. AI-written articles, speeches, and synthetic voices are becoming more common, making it increasingly difficult for the average person to discern what is real and what is fabricated. This presents an existential threat to democracy itself, as trust in the media and public information collapses when the distinction between fact and fiction is no longer clear.

The problem is not just that AI can generate misinformation; it is that it is rewarded for doing so. Algorithms are designed to maximise engagement, and false or sensational content often generates more engagement than sober, fact-based information, so these systems naturally prioritise it. In this system, misinformation becomes profitable, not accidental: it isn’t a bug but a feature of systems optimised for virality. The result is an ecosystem where AI doesn’t just allow misinformation; it actively amplifies it, because doing so aligns with the platform’s business incentives.

The Government Must Act: A Framework

The time for inaction is over. Without regulatory intervention, AI will continue to concentrate power in the hands of a few corporations, allowing them to shape public discourse to serve their own interests. If AI is left unchecked, the consequences are clear: truth will be distorted, as engagement-driven algorithms promote emotionally charged content over fact-based reporting, blurring the line between reality and fiction. Trust in institutions will erode as people become increasingly unsure whether the information coming from governments, media, or public bodies has been curated or manipulated by opaque AI systems. And democracy itself will be undermined as citizens make decisions based on skewed or false narratives, weakening informed debate and enabling those with the most algorithmic power, not the most persuasive ideas, to dominate public discourse. The government must act now to put a framework in place that ensures AI is used for the common good, not for the benefit of a select few. Here’s how:

1. AI Transparency Laws

What the government must do: Introduce legislation requiring AI companies to disclose how their algorithms work and make AI-generated content easily identifiable. These laws should mandate transparency in how information is curated and presented to users.

Why this would work: Transparency would allow the public to understand how information is being filtered and manipulated. It would create accountability and help prevent the covert spread of misinformation, enabling individuals to make informed decisions about the content they consume.

Because without it: AI systems will continue to operate in secrecy, shaping the information landscape without any checks on their power. Misinformation will proliferate, and the public’s ability to distinguish fact from fiction will diminish.

2. Prohibit AI Censorship Without Public Oversight

What the government must do: Prevent AI companies from unilaterally deciding what constitutes “misinformation” or “hate speech” without the involvement of independent regulatory bodies. There must be a system of checks and balances to ensure that AI is not used to silence dissent or manipulate political discourse.

Why this would work: Public oversight would guarantee that AI does not become a tool of censorship or political control. Independent regulatory bodies could ensure that free speech is protected while still preventing the spread of harmful misinformation.

Because without it: AI platforms will continue to act as unaccountable gatekeepers of speech, suppressing opinions that do not align with their corporate or political interests. In a world where AI controls the narrative, democracy and free expression would be at risk.

3. Establish Independent AI Ethics Boards with Enforcement Power

What the government must do: Establish independent AI ethics boards with real enforcement authority to regulate AI’s role in communication. These boards should be responsible for ensuring that AI is developed and deployed in ways that uphold ethical standards and public interests.

Why this would work: Independent ethics boards would ensure that AI systems are designed with human dignity and ethical principles in mind. They would hold corporations accountable for any harm caused by their AI technologies and ensure that these systems are used responsibly.

Because without it: AI systems would continue to evolve unchecked, with little regard for the ethical consequences of their design or deployment. The absence of an independent body to enforce ethical guidelines would lead to a free-for-all where profit is the only priority.

Some will argue that strong ethical oversight would slow AI innovation, especially when global competitors like China may not be held to the same standards. This is a legitimate concern. However, sacrificing ethics for speed risks long-term harm, not just to individuals but to the very legitimacy of democratic societies. A race to the bottom, where profit and power override human dignity, would leave us with powerful systems that are unaccountable and unsafe. Instead, we must lead by example, demonstrating that innovation and integrity are not mutually exclusive. A robust, enforceable ethical framework is not a barrier to progress, but the foundation of a trustworthy AI future.

Conclusion: The Future of AI Must Serve the Common Good

So why hasn’t regulation happened yet? In part, governments have been slow to act because they lack both the technical understanding and the political will to confront powerful tech companies. In the United States especially, a mix of corporate lobbying, free-market ideology, and partisan gridlock has stalled meaningful oversight. It may take a major public scandal or clear threat to national security for serious regulation to begin, but waiting for a disaster is a poor strategy.

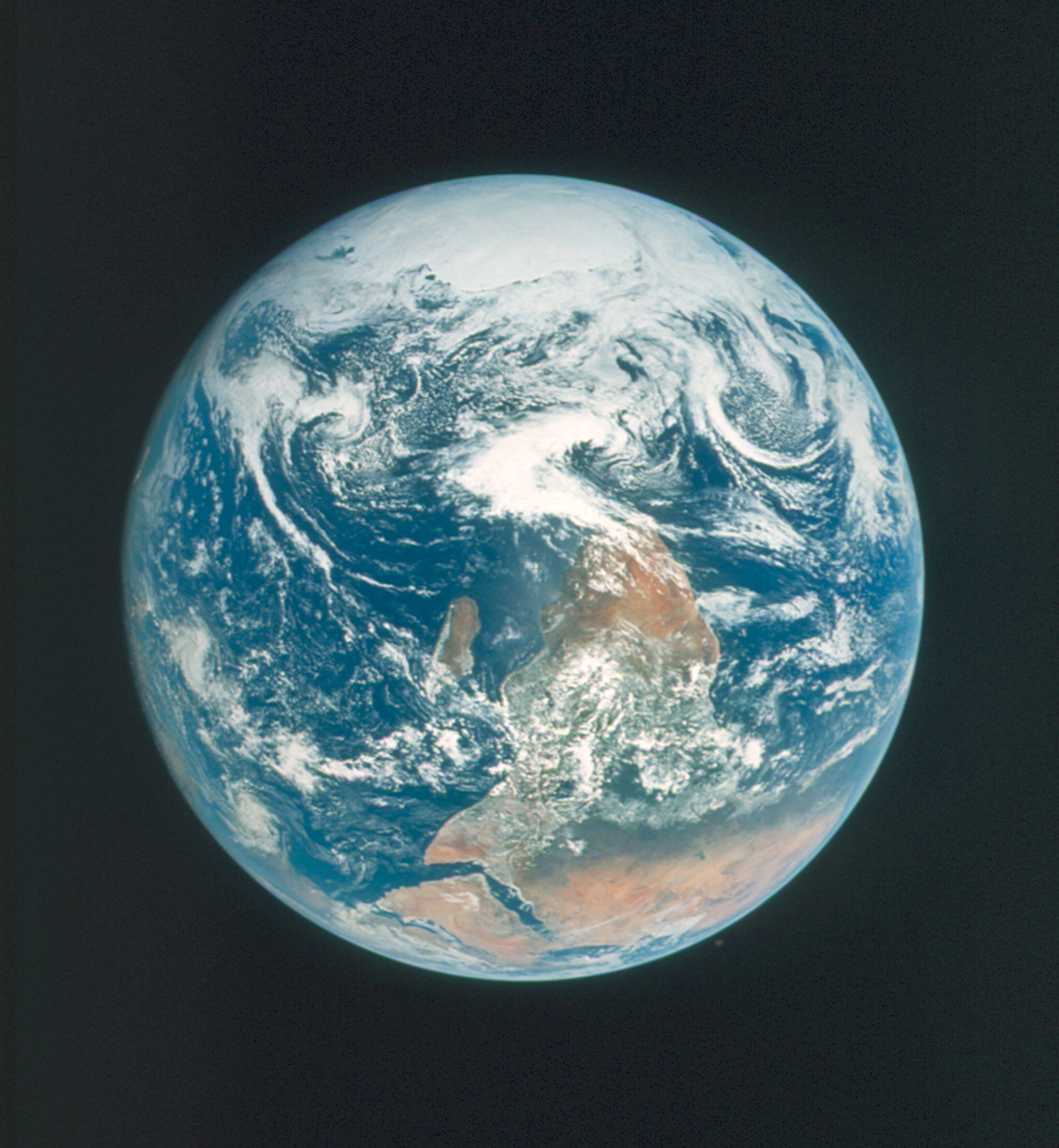

AI is neither inherently good nor evil – it is a tool, and like any tool, its impact depends on how it is used. The critical question we face today is whether AI will be used to serve humanity, or whether it will be used to reshape humanity to serve the interests of the few who control it.

The crisis is clear: AI is reshaping communication in ways that undermine democracy and public trust. But this does not have to be the future. Through decisive action, governments can ensure that AI serves the common good, fostering transparency, accountability, and fairness in the information ecosystem.

The time to act is now. Without regulation, AI will continue to concentrate power, manipulate discourse, and erode the foundations of free societies. But with the right regulatory framework, AI can be a force for good – a tool that enhances communication, promotes truth, and strengthens democracy. The choice is ours: we can either let AI control us, or we can take control of AI to ensure that it serves the best of humanity.

The future of AI is still unwritten – but the path we choose today will define its impact on future generations.

About the author

Ryan McGuigan is a parliamentary assistant at the Scottish Parliament and co-editor of CATALYST. He holds a degree in Politics from the University of Glasgow and edits ‘The Carriage Post’, a publication exploring politics, religion, and culture through independent, reflective commentary. His interests include Scottish political thought, journalism, and the intersection of faith and public life.
